Semantic Search Principles

Understand how embeddings capture meaning, similarity metrics, and the mathematics behind semantic vector spaces.

This lesson covers vector embeddings, similarity metrics, and neural retrieval models, the essential concepts for building modern AI-powered search systems that understand meaning rather than just matching keywords.

Welcome to Semantic Search 🔍

Traditional keyword-based search systems match exact words, but they struggle when users express the same idea differently. Semantic search revolutionizes information retrieval by understanding the meaning behind queries and documents, not just their literal text. This breakthrough enables search engines to return relevant results even when exact keywords don't match.

In this lesson, you'll discover how modern AI systems transform text into mathematical representations, measure conceptual similarity, and retrieve information based on intent rather than syntax. These principles power everything from Google's search algorithms to enterprise knowledge bases and conversational AI assistants.

Core Concepts: Understanding Semantic Search 🧠

What Is Semantic Search?

Semantic search is an information retrieval approach that understands the contextual meaning and intent behind search queries, rather than performing simple keyword matching. It leverages natural language processing (NLP) and machine learning to interpret concepts, relationships, and user intent.

Traditional vs. Semantic Search:

| Aspect | Traditional Keyword Search | Semantic Search |

|---|---|---|

| Matching Method | Exact text matching (lexical) | Meaning-based matching (semantic) |

| Query Understanding | "dog food" finds exact phrase | "dog food" also finds "canine nutrition", "pet meals" |

| Synonym Handling | Misses synonyms without manual rules | Automatically recognizes related terms |

| Context Awareness | "apple" returns all mentions | Distinguishes Apple (company) vs. apple (fruit) from context |

| Complexity | Simple, fast, deterministic | Complex, requires ML models, probabilistic |

💡 Key Insight: Semantic search bridges the "vocabulary gap"—the problem where users and document authors use different words to express the same concept.

Vector Embeddings: The Foundation 📐

At the heart of semantic search lies vector embeddings—numerical representations of text that capture semantic meaning. Each piece of text (word, sentence, or document) is transformed into a point in high-dimensional space, where semantically similar items cluster together.

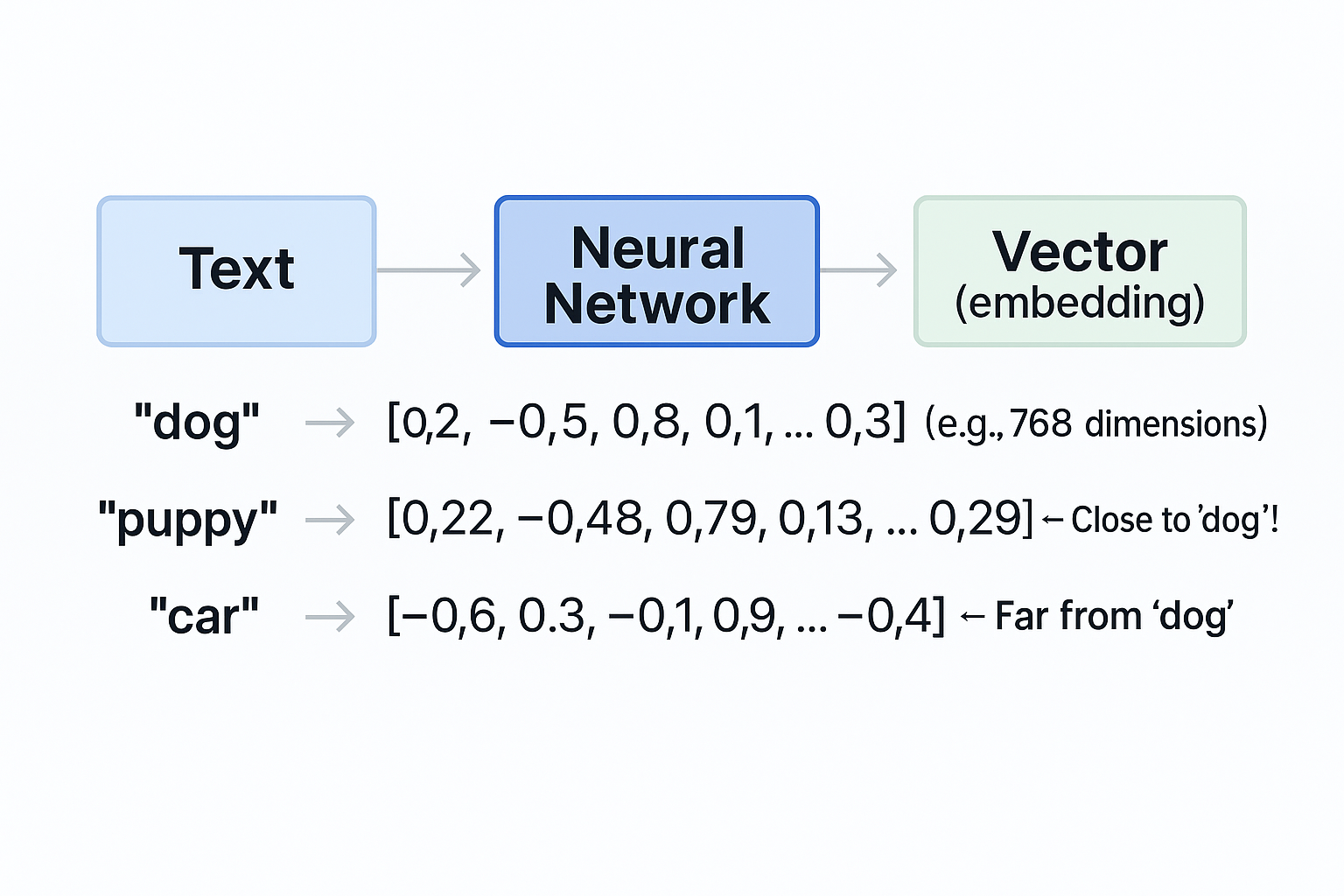

How Embeddings Work:

    Text → Neural Network → Vector (embedding)

    "dog"   → [0.2, -0.5, 0.8, 0.1, ..., 0.3]       (e.g., 768 dimensions)
    "puppy" → [0.22, -0.48, 0.79, 0.13, ..., 0.29]  ← Close to "dog"!
    "car"   → [-0.6, 0.3, -0.1, 0.9, ..., -0.4]     ← Far from "dog"

Popular Embedding Models:

- Word2Vec (2013): Word-level embeddings, learns from word co-occurrence

- GloVe (2014): Global vectors capturing corpus statistics

- BERT (2018): Contextual embeddings that change based on surrounding words

- Sentence-BERT (2019): Optimized for sentence and paragraph similarity

- OpenAI text-embedding-ada-002 (2022): General-purpose transformer-based embeddings served through an API

🔬 Technical Detail: Modern embeddings typically have 384-1536 dimensions. Each dimension captures abstract semantic features learned from massive text corpora.

Similarity Metrics: Measuring Semantic Closeness 📏

Once text is converted to vectors, we need mathematical methods to measure how "similar" two embeddings are. The most common metrics:

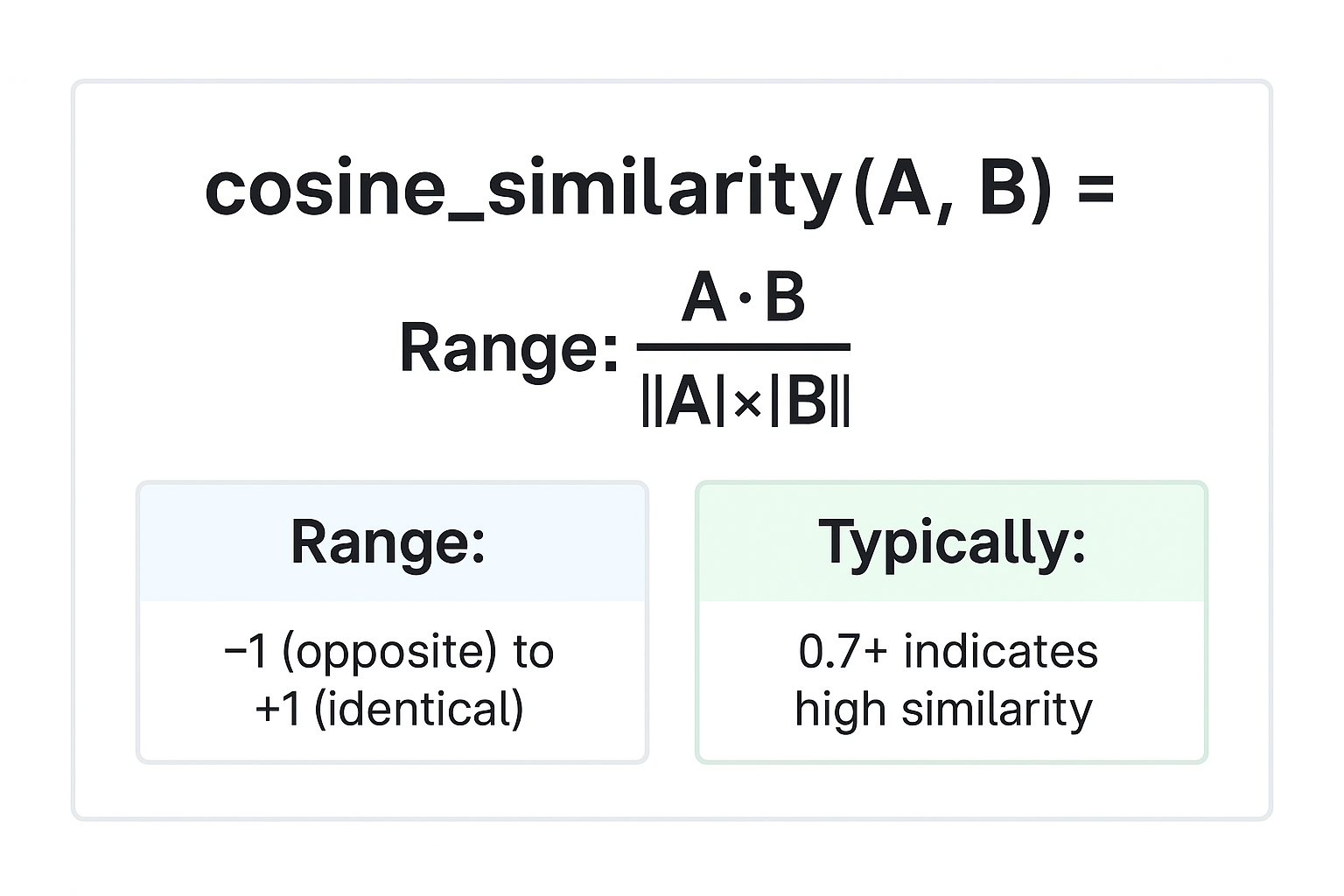

1. Cosine Similarity

Measures the angle between two vectors, ignoring magnitude. Most popular for text embeddings.

    cosine_similarity(A, B) = (A · B) / (||A|| × ||B||)

    Range: -1 (opposite direction) to +1 (same direction)
    Rule of thumb: 0.7+ often indicates high similarity, but the threshold varies by model

Why cosine? It focuses on direction (meaning) rather than length (document size), making it ideal for comparing texts of different lengths.
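As a minimal sketch of the formula above, the function below computes cosine similarity in plain Python. The 4-dimensional vectors are illustrative values (the first four numbers of the "dog", "puppy", and "car" examples), not output from a real embedding model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

# Toy vectors for illustration only (not real model output)
dog = [0.2, -0.5, 0.8, 0.1]
puppy = [0.22, -0.48, 0.79, 0.13]
car = [-0.6, 0.3, -0.1, 0.9]

print(cosine_similarity(dog, puppy))  # close to 1: nearly the same direction
print(cosine_similarity(dog, car))    # negative: pointing away from "dog"
```

Scaling a vector (for example, multiplying every entry of `dog` by 10) leaves the result unchanged, which is the "direction, not length" property described above.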

2. Euclidean Distance

Straight-line distance between vector endpoints.

    euclidean_distance(A, B) = √(Σ(Aᵢ - Bᵢ)²)

    Range: 0 (identical) to ∞ (very different)
    Smaller = more similar

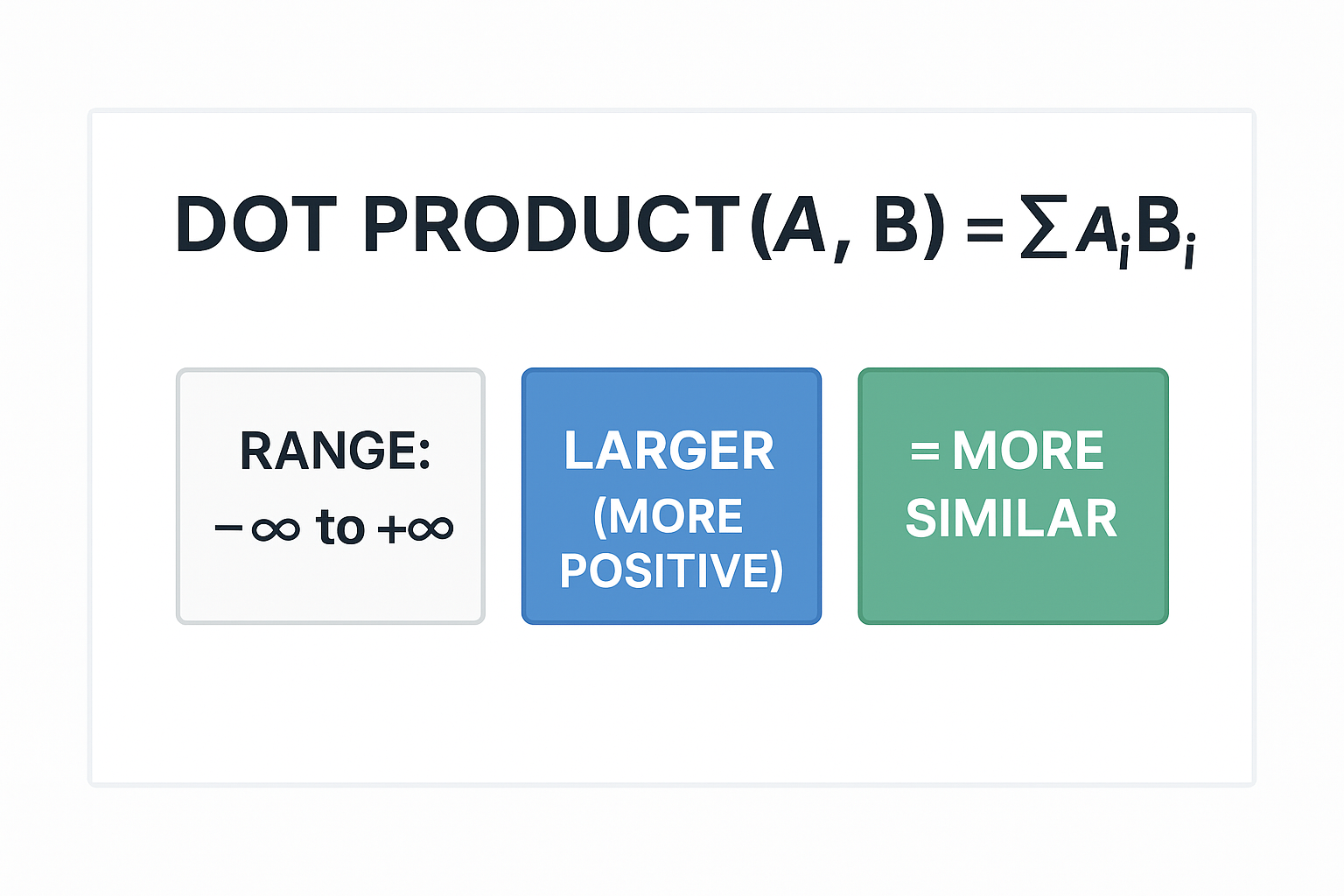

3. Dot Product

Simple multiplication of corresponding dimensions, summed.

    dot_product(A, B) = Σ(Aᵢ × Bᵢ)

    Range: -∞ to +∞
    Larger (more positive) = more similar

Comparison:

| Metric | Best For | Sensitive To | Speed |

|---|---|---|---|

| Cosine | Text, normalized embeddings | Direction only | Medium |

| Euclidean | Images, spatial data | Magnitude and direction | Fast |

| Dot Product | Pre-normalized vectors | Both magnitude and direction | Fastest |

💡 Practical Tip: For most semantic search applications, cosine similarity is the default choice. Many vector databases optimize specifically for cosine calculations.
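To make the "Sensitive To" column concrete, here is a small sketch that scores a vector against a scaled copy of itself with all three metrics. The vectors are made-up numbers chosen so the arithmetic is easy to check by hand.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

query = [1.0, 2.0, 2.0]
same_direction = [2.0, 4.0, 4.0]  # the query scaled by 2

metrics = {
    "cosine": cosine(query, same_direction),        # ignores the scaling
    "euclidean": euclidean(query, same_direction),  # reports a gap of 3
    "dot": dot(query, same_direction),              # grows with magnitude
}
print(metrics)
```

Cosine treats the two vectors as a perfect match, while Euclidean distance and dot product both react to the change in length. This is why the choice of metric matters most when embeddings are not normalized.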

The Semantic Search Pipeline 🔄

Here's how semantic search systems process queries and retrieve results:

    ┌────────────────────────────────────────────┐
    │          SEMANTIC SEARCH PIPELINE          │
    └────────────────────────────────────────────┘

      📝 Query: "best budget smartphones"
                     │
                     ↓
      🤖 STEP 1: Query Encoding
                     │  (Embedding model converts to vector)
                     ↓
          [0.23, -0.41, 0.67, ..., 0.15]  ← Query vector
                     │
                     ↓
      🔍 STEP 2: Vector Search
                     │  (Compare against document embeddings)
                     │
          ┌──────────┴──────────┬──────────┬──────────┐
          │                     │          │          │
          ↓                     ↓          ↓          ↓
        Doc1                  Doc2       Doc3       Doc4
        0.92                  0.87       0.45       0.23     ← Similarity scores
      (phones)              (value)   (cameras)  (tablets)
          │                     │
          ↓                     ↓
      🏆 STEP 3: Ranking & Retrieval
                     │  (Top K most similar)
                     ↓
      📊 Results:
         1. "Top affordable phones in 2026" (0.92)
         2. "Value-for-money mobile devices" (0.87)

Pipeline Components:

- Encoder: Neural network that converts text to embeddings (same model for both queries and documents)

- Vector Store: Database optimized for storing and searching high-dimensional vectors (e.g., Pinecone, Weaviate, FAISS)

- Retriever: Component that performs similarity search and ranks results

- Post-processor: Optional reranking or filtering based on metadata, recency, or business rules

🔧 Implementation Note: The encoder must be consistent. Queries and documents must be embedded by the same model (or by query and document encoders that were trained together) so that their vectors live in the same semantic space.
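The pipeline components can be sketched end to end in a few lines. Everything here is a stand-in: `WORD_VECTORS` and `toy_encode` are a hypothetical hand-written lookup that plays the role of the encoder, and a plain Python list plays the role of the vector store. A real system would swap in an embedding model and a vector database.

```python
import math

# Hypothetical hand-written word vectors on three made-up axes
# (phone-ness, low-price-ness, camera-ness). A real system would call an
# embedding model here instead of this lookup table.
WORD_VECTORS = {
    "smartphones": (1.0, 0.0, 0.0), "phones": (1.0, 0.0, 0.0),
    "mobile": (1.0, 0.0, 0.0), "budget": (0.0, 1.0, 0.0),
    "affordable": (0.0, 1.0, 0.0), "value": (0.0, 1.0, 0.0),
    "cameras": (0.0, 0.0, 1.0), "lenses": (0.0, 0.0, 1.0),
}

def toy_encode(text):
    """Encoder stand-in: sum the known word vectors, then scale to unit length."""
    total = [0.0, 0.0, 0.0]
    for word in text.lower().split():
        for i, value in enumerate(WORD_VECTORS.get(word, (0.0, 0.0, 0.0))):
            total[i] += value
    norm = math.sqrt(sum(x * x for x in total))
    return [x / norm for x in total] if norm else total

def search(query, index, top_k=2):
    """Retriever: score every stored vector against the query, keep the top_k."""
    query_vec = toy_encode(query)  # same encoder as the documents
    scored = [(sum(q * d for q, d in zip(query_vec, vec)), doc) for doc, vec in index]
    return sorted(scored, reverse=True)[:top_k]

documents = [
    "Top affordable phones",
    "Value mobile devices with good cameras",
    "Professional cameras and lenses",
]
index = [(doc, toy_encode(doc)) for doc in documents]  # vector store stand-in
results = search("best budget smartphones", index)
for score, doc in results:
    print(f"{score:.2f}  {doc}")
```

The query shares no words with the top result, yet it ranks first because "budget" and "affordable" (and "smartphones" and "phones") map to the same directions in the toy space. A post-processor would operate on `results` after this step.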

Dense vs. Sparse Retrieval 🎯

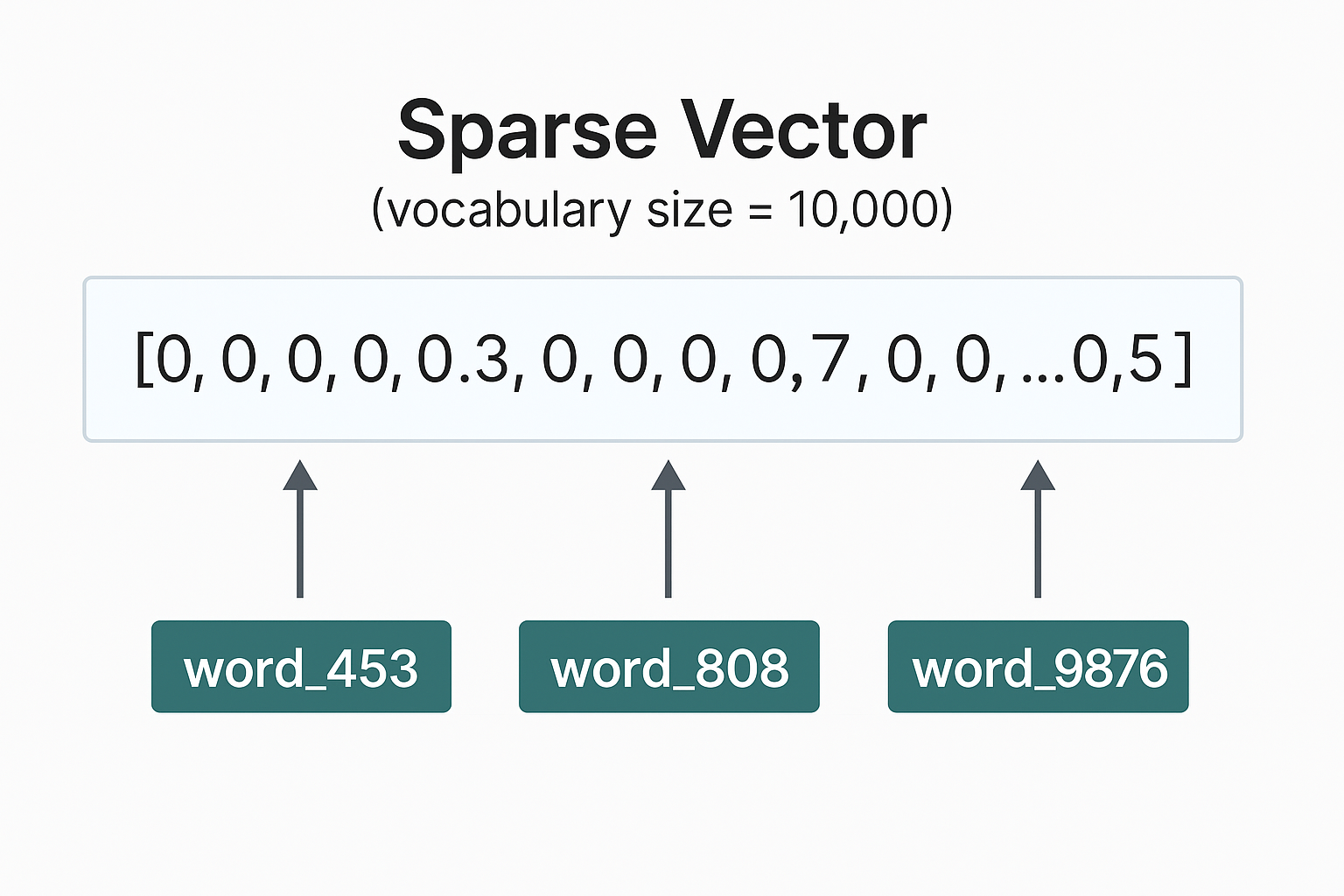

Sparse Retrieval (Traditional)

- Represents documents as sparse vectors (mostly zeros)

- Examples: TF-IDF, BM25

- Fast, interpretable, works well for exact term matching

- Struggles with synonyms and semantic relationships

    Sparse Vector (vocabulary size = 10,000):
    [0, 0, 0, 0.3, 0, 0, 0, 0.7, 0, 0, ..., 0, 0.5, 0]
               ↑             ↑                  ↑
           word_453      word_808           word_9876
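A sparse vector is usually stored as a mapping from term to weight rather than as a long list of zeros. The sketch below uses a deliberately simplified TF-IDF variant (term frequency times `log(N / df)`); production libraries and BM25 use smoothed, more elaborate formulas, so treat the exact weights as illustrative.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Return one sparse {term: weight} dict per document."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
            if doc_freq[term] < n_docs  # terms found in every document carry no weight
        })
    return vectors

docs = ["dog food for puppies", "canine nutrition guide", "dog training guide"]
vectors = tfidf_vectors(docs)
print(vectors[0])
print(set(vectors[0]) & set(vectors[1]))  # terms shared by the first two documents
```

The first two documents are about the same topic but share no terms, so any similarity computed from these sparse vectors is zero. That is the synonym weakness listed above.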

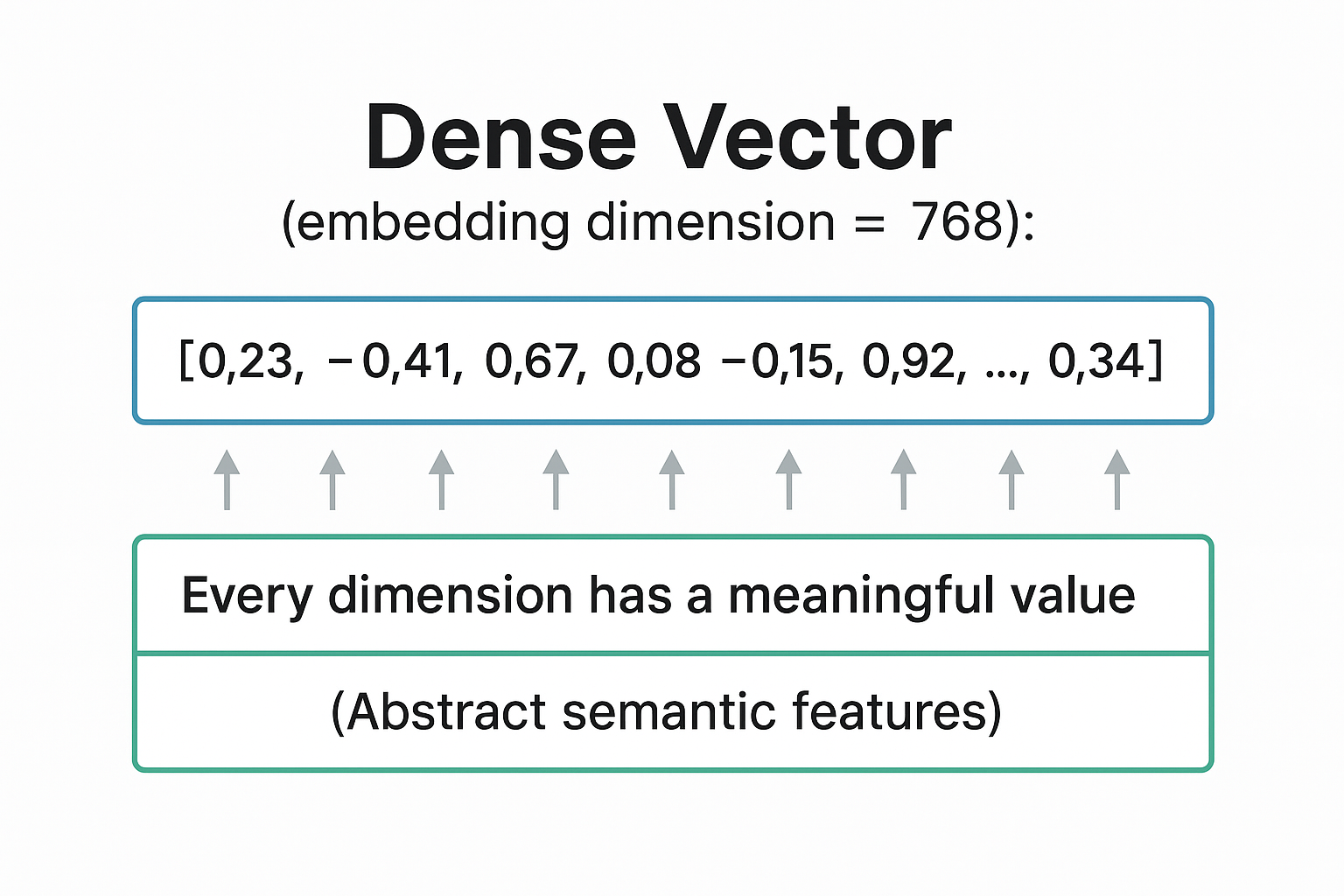

Dense Retrieval (Modern Semantic)

- Represents documents as dense vectors (all positions have values)

- Examples: BERT, Sentence Transformers

- Captures semantic meaning, handles paraphrasing

- Requires more compute and training data

    Dense Vector (embedding dimension = 768):
    [0.23, -0.41, 0.67, 0.08, -0.15, 0.92, ..., 0.34, -0.19]
    Every dimension has a meaningful value (abstract semantic features)

Hybrid Search: Many production systems combine both approaches:

- Use sparse retrieval for exact keyword matching

- Use dense retrieval for semantic understanding

- Combine scores for optimal results

| Scenario | Sparse Works Better | Dense Works Better |

|---|---|---|

| Query Type | "SKU-12345", exact product codes | "comfortable running shoes for beginners" |

| Domain | Legal documents (exact terminology) | Customer support (varied phrasings) |

| Data Volume | Millions of short documents | Thousands of detailed documents |
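One common way to combine a sparse and a dense result list without comparing their raw scores is Reciprocal Rank Fusion (RRF). The lesson does not prescribe a fusion method, so this is one option among several; the document ids are invented, and `k=60` is the constant conventionally used with RRF.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of doc ids: each list contributes 1 / (k + rank)."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical outputs of a keyword retriever and an embedding retriever
sparse_ranking = ["doc_sku", "doc_a", "doc_b"]
dense_ranking = ["doc_a", "doc_c", "doc_sku"]

fused = reciprocal_rank_fusion([sparse_ranking, dense_ranking])
print(fused)
```

Documents that appear near the top of both lists rise to the top of the fused list. Because only ranks are used, unbounded BM25 scores and bounded cosine scores never need to be put on a common scale.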

Neural Retrieval Models 🧬

Modern semantic search relies on neural retrieval models—deep learning architectures trained specifically for semantic similarity.

Key Architectures:

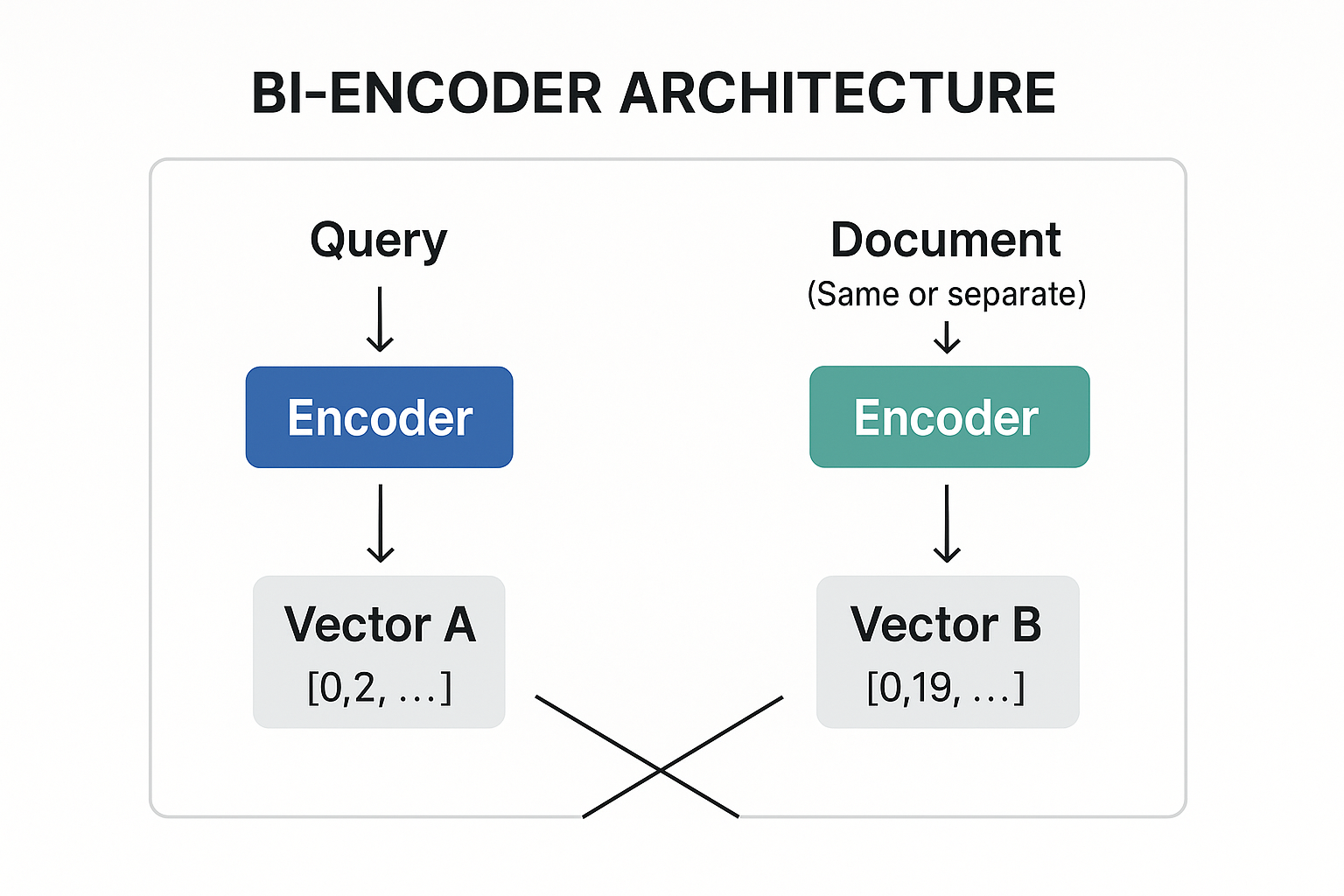

1. Bi-Encoder (Two-Tower Model)

    ┌──────────────────────────────────────────┐
    │         BI-ENCODER ARCHITECTURE          │
    └──────────────────────────────────────────┘

        Query            Document
          │                  │
          ↓                  ↓
      ┌────────┐        ┌────────┐
      │Encoder │        │Encoder │   (Same or separate)
      │   🧠   │        │   🧠   │
      └────────┘        └────────┘
          │                  │
          ↓                  ↓
       Vector A          Vector B
      [0.2, ...]        [0.19, ...]
           ╲                ╱
            ↓              ↓
         Similarity Score (cosine)

✅ Pros: Fast at inference (encode documents once, store vectors)

❌ Cons: No interaction between query and document during encoding

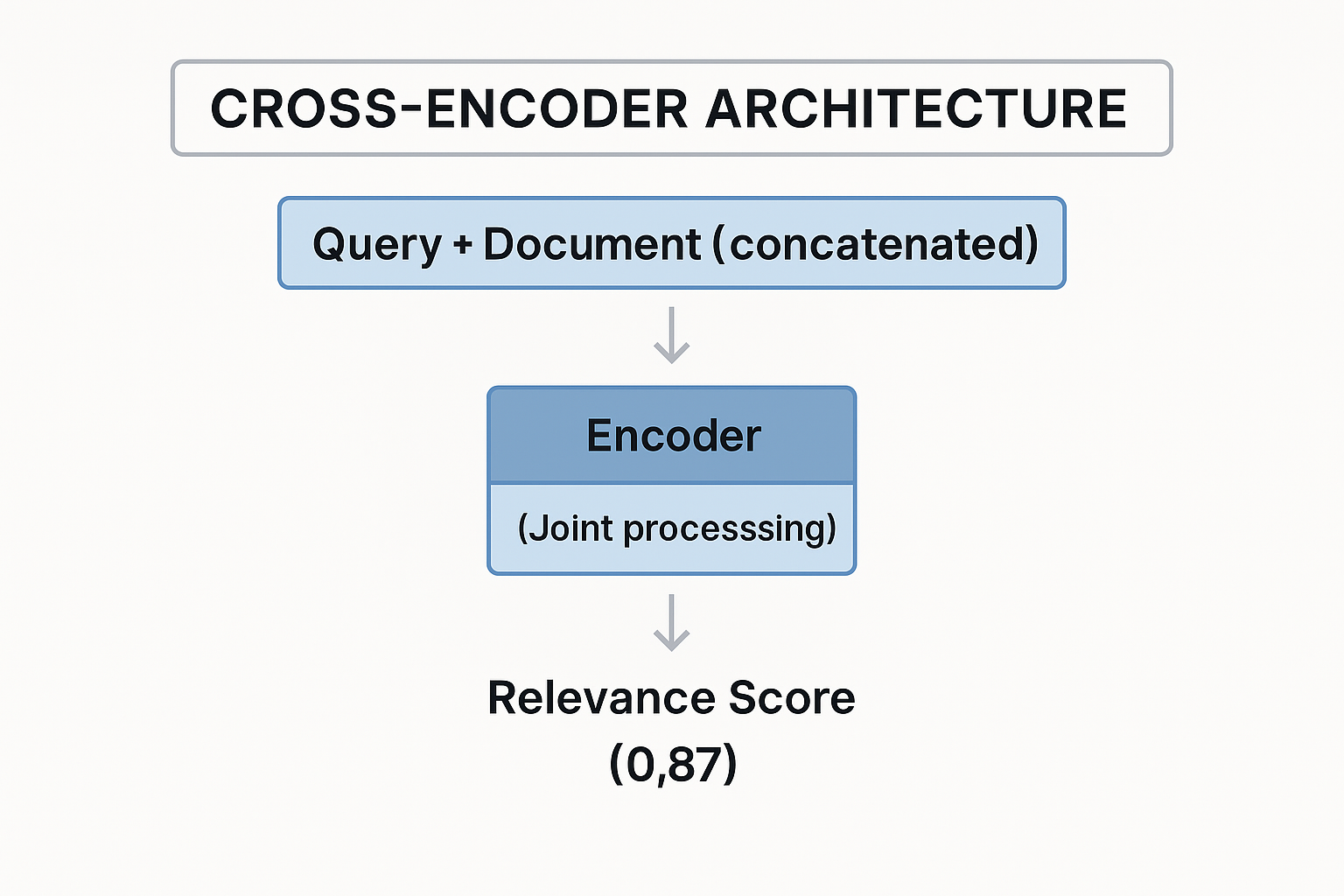

2. Cross-Encoder

    ┌──────────────────────────────────────────┐
    │        CROSS-ENCODER ARCHITECTURE        │
    └──────────────────────────────────────────┘

       Query + Document (concatenated)
                    │
                    ↓
             ┌─────────────┐
             │   Encoder   │   (Joint processing)
             │     🧠      │
             └─────────────┘
                    │
                    ↓
          Relevance Score (0.87)

✅ Pros: More accurate (sees both inputs simultaneously)

❌ Cons: Slow (must re-encode every query-document pair)

Best Practice: Use bi-encoders for initial retrieval (fast, finds top 100), then re-rank with cross-encoders (accurate, narrows to top 10).
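The two-stage pattern can be sketched independently of any particular model. In the example below, `overlap` and `jaccard` are simple word-matching stand-ins for a bi-encoder score and a cross-encoder score; they are assumptions made so the sketch runs without a model, not real neural scorers.

```python
def retrieve_then_rerank(query, candidates, fast_score, slow_score,
                         retrieve_k=100, final_k=10):
    """Two-stage ranking: a cheap scorer narrows the pool, a costly scorer orders it."""
    shortlist = sorted(candidates, key=lambda d: fast_score(query, d),
                       reverse=True)[:retrieve_k]
    return sorted(shortlist, key=lambda d: slow_score(query, d),
                  reverse=True)[:final_k]

def overlap(query, doc):
    """Stand-in for a bi-encoder similarity: number of shared words."""
    return len(set(query.lower().split()) & set(doc.lower().split()))

def jaccard(query, doc):
    """Stand-in for a cross-encoder relevance score: shared words / all words."""
    q, d = set(query.lower().split()), set(doc.lower().split())
    return len(q & d) / len(q | d)

docs = [
    "budget phones",
    "best budget phones and many other unrelated gadgets reviewed",
    "gardening tips",
]
top = retrieve_then_rerank("best budget phones", docs, overlap, jaccard,
                           retrieve_k=2, final_k=1)
print(top)
```

The expensive scorer only ever sees the `retrieve_k` shortlisted documents, which is what keeps the cross-encoder stage affordable on a large corpus.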

🤔 Did you know? Google's BERT update in 2019 was primarily a semantic search improvement, helping the search engine understand conversational queries and long-tail searches.

Examples: Semantic Search in Action 💼

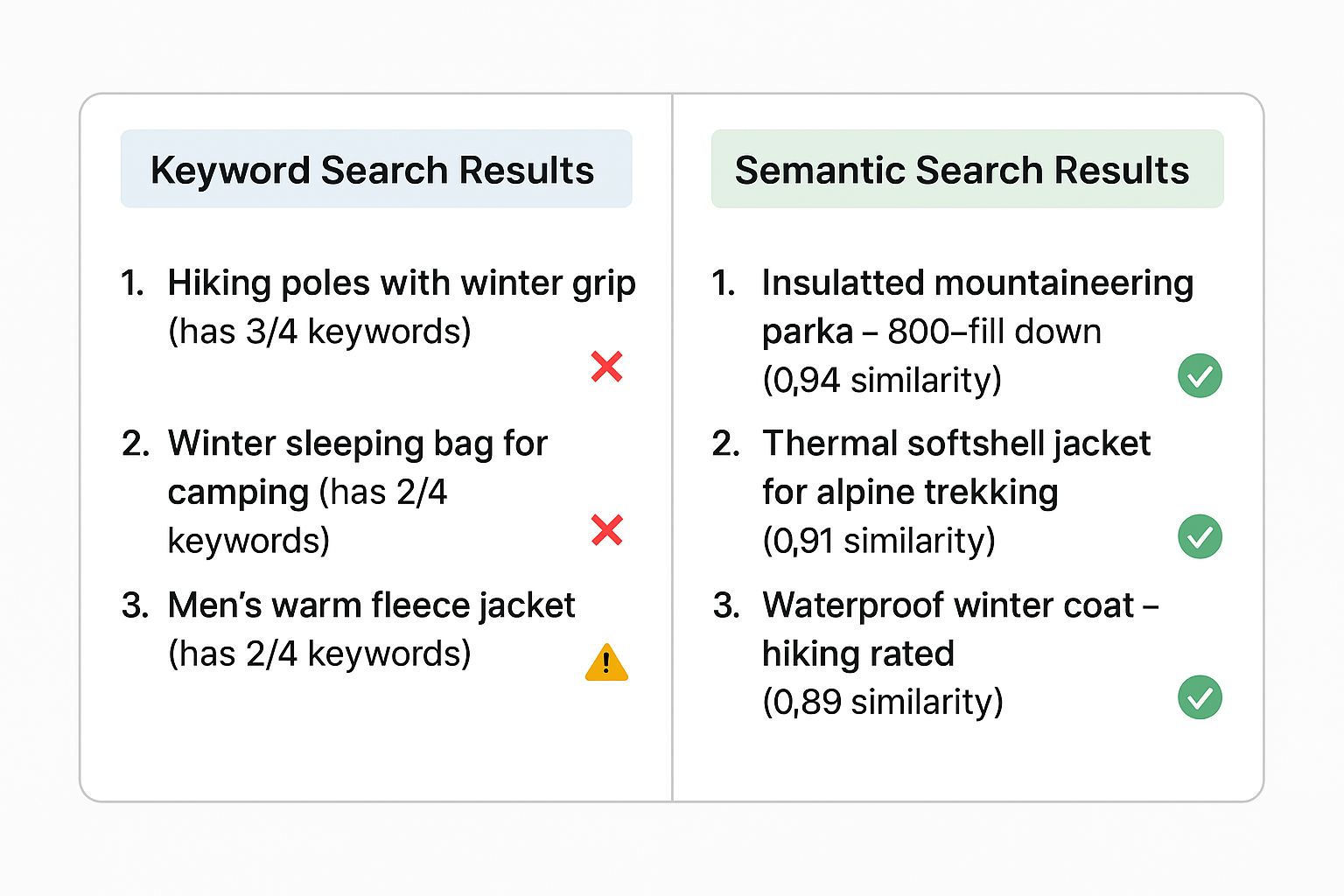

Example 1: E-Commerce Product Search

Scenario: A customer searches for "warm winter jacket for hiking" on an outdoor gear website.

Traditional Keyword Approach:

- Searches for exact terms: "warm", "winter", "jacket", "hiking"

- Misses products described as "insulated outdoor parka" or "thermal mountaineering coat"

- Returns irrelevant results containing individual keywords (e.g., "summer hiking guide" + "winter sleeping bag")

Semantic Search Approach:

| Step | Process | Result |

|---|---|---|

| 1 | Query embedding generated | Vector captures concepts: cold-weather, outdoor activity, upper-body garment |

| 2 | Compare against product embeddings | Finds similar items regardless of exact wording |

| 3 | Rank by similarity + business rules | Top results include "insulated parka", "thermal coat", "fleece-lined jacket" |

Results Comparison:

🔴 Keyword Search Results:

1. "Hiking poles with winter grip" (has 2/4 keywords) ❌
2. "Men's warm fleece jacket" (has 2/4 keywords) ⚠️
3. "Winter sleeping bag for camping" (has 1/4 keywords) ❌

🟢 Semantic Search Results:

1. "Insulated mountaineering parka - 800-fill down" (0.94 similarity) ✅
2. "Thermal softshell jacket for alpine trekking" (0.91 similarity) ✅
3. "Waterproof winter coat - hiking rated" (0.89 similarity) ✅

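💡 Business Impact: By showing relevant products even when customers use different terminology than the product descriptions, semantic search can increase e-commerce conversion rates, by as much as 15-25% in some cases. The actual effect depends on the catalog and on how good the existing search already is.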

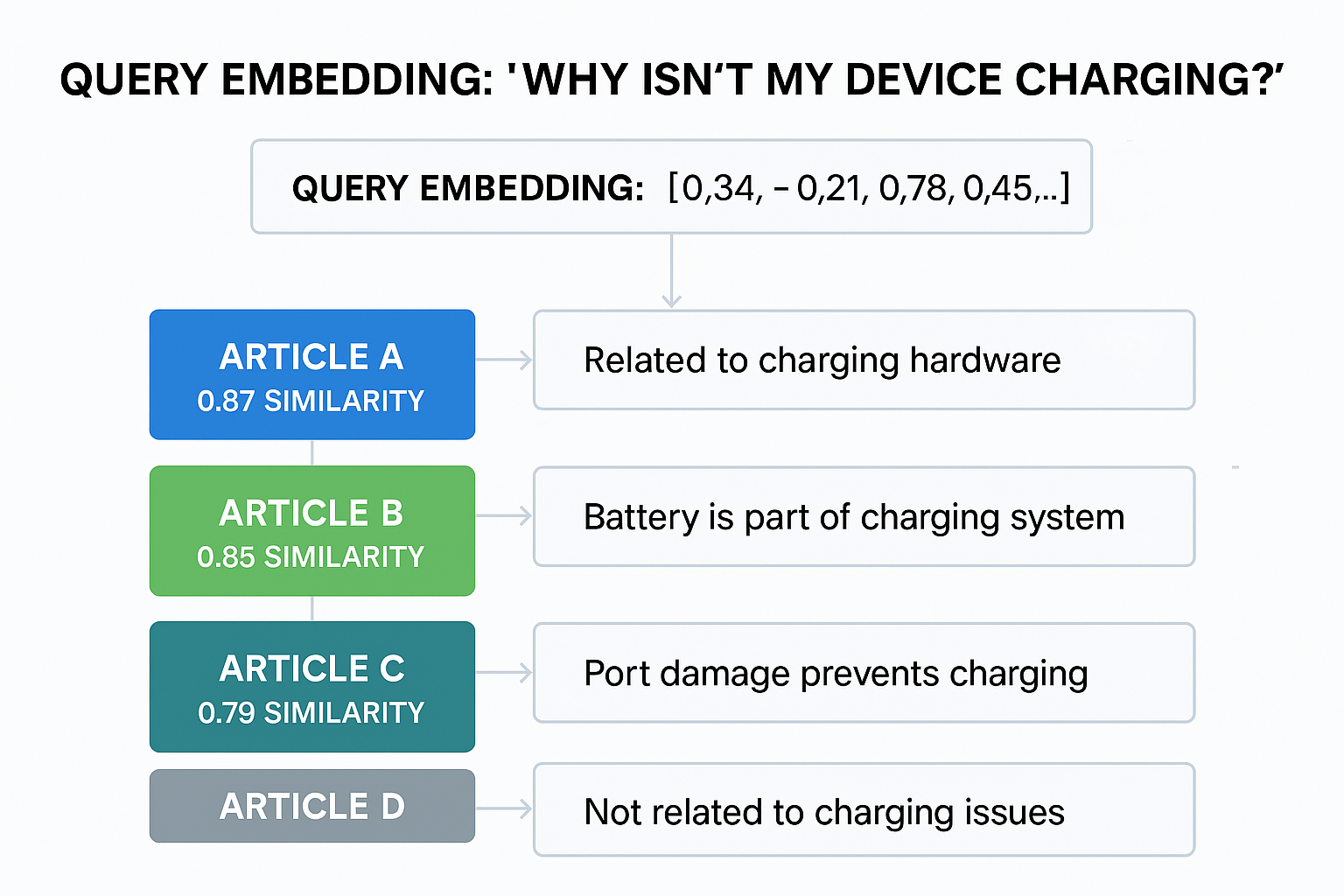

Example 2: Customer Support Knowledge Base

Scenario: A user asks, "Why isn't my device charging?" in a tech support chatbot.

Knowledge Base Articles (simplified):

- Article A: "Troubleshooting power adapter issues"

- Article B: "Battery not holding charge - solutions"

- Article C: "USB-C port damage and repair"

- Article D: "Software update instructions"

Semantic Matching Process:

    Query: "Why isn't my device charging?"
                 ↓
    Query embedding: [0.34, -0.21, 0.78, 0.45, ...]
                 │
                 ├─→ Article A: 0.87 similarity  ← Related to charging hardware
                 ├─→ Article B: 0.85 similarity  ← Battery is part of charging system
                 ├─→ Article C: 0.79 similarity  ← Port damage prevents charging
                 └─→ Article D: 0.23 similarity  ← Not related to charging issues

Why It Works:

- The query doesn't contain "power adapter", "battery", or "USB-C" explicitly

- Semantic understanding recognizes that "charging" relates to these concepts

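- Articles A and C share no keywords with the query, so a keyword search would likely miss them despite their relevance; Article B matches only if the engine stems "charging" to "charge"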

🔧 Implementation Detail: The same embedding model must encode both the user's question and all knowledge base articles. Models like Sentence-BERT or OpenAI's text-embedding-ada-002 are commonly used.

Example 3: Academic Research Paper Discovery

Scenario: A researcher searches for "methods to improve neural network training speed" in a research database.

Traditional Search Issues:

- Misses papers using different terminology: "accelerating deep learning optimization", "faster convergence techniques"

- Can't understand the conceptual connection between "learning rate scheduling" and "training speed"

Semantic Search Solution:

| Query Concept | Semantically Related Papers Found | Similarity Score |

|---|---|---|

| "improve training speed" | "Mixed precision training for faster GPU computation" | 0.91 |

| | "Gradient accumulation reduces training time" | 0.88 |

| | "Distributed data parallel architectures" | 0.85 |

| "neural network" | "Transformer model optimization strategies" | 0.89 |

| | "CNN acceleration on mobile devices" | 0.87 |

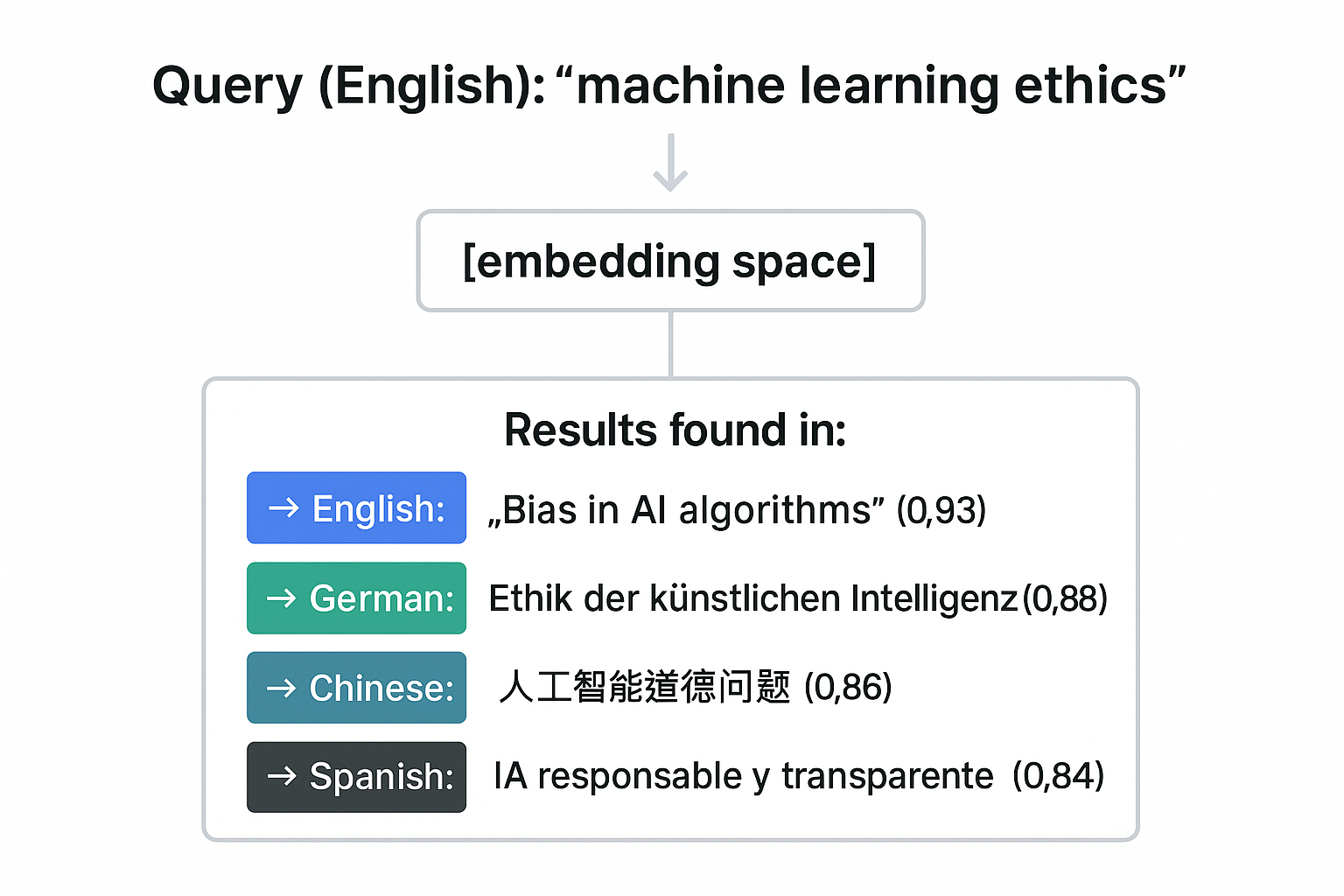

Advanced Feature - Cross-Lingual Semantic Search:

Multilingual embedding models (e.g., multilingual BERT) enable searching across languages:

    Query (English): "machine learning ethics"
                 ↓
          [embedding space]
                 │
    Results found in:
      → English: "Bias in AI algorithms" (0.93)
      → German:  "Ethik der künstlichen Intelligenz" (0.88)
      → Chinese: "人工智能道德问题" (0.86)
      → Spanish: "IA responsable y transparente" (0.84)

🌍 Real-World Application: Platforms like Semantic Scholar and Google Scholar use semantic search to connect researchers across language barriers and vocabulary differences.

Example 4: Legal Document Retrieval

Scenario: An attorney needs to find precedents related to "employer liability for remote worker accidents."

Challenge: Legal documents use varied terminology—"telecommuting", "work-from-home", "virtual workspace", "home office injuries", "vicarious liability".

Hybrid Approach (combining sparse + dense):

    ┌────────────────────────────────────────────┐
    │        HYBRID LEGAL SEARCH PIPELINE        │
    └────────────────────────────────────────────┘

    Query: "employer liability for remote worker accidents"
                          │
           ┌──────────────┼──────────────┐
           ↓              ↓              ↓
     BM25 (sparse)   BERT (dense)     Metadata
       Keywords       Semantics   (Date, jurisdiction)
           │              │              │
           ↓              ↓              ↓
      Score: 0.45    Score: 0.82    Filter: 2020+
           │              │              │
           └──────────────┴──────────────┘
                          │
                          ↓
                 Combined Score: 0.67
                          │
                          ↓
    📑 Top Cases Retrieved:
       1. "Home office injury - vicarious liability" (0.89)
       2. "Telecommuting accident claims" (0.85)
       3. "Remote work safety obligations" (0.82)

Why Hybrid?

- Sparse (BM25): Catches exact legal terms and citations ("§ 831 BGB", case numbers)

- Dense (BERT): Understands conceptual relationships between "remote worker" and "telecommuting"

- Metadata: Filters by relevance factors like date and jurisdiction

💡 Professional Tip: Legal and medical domains often benefit most from hybrid search because they require both precise terminology matching and semantic understanding.
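The diagram in Example 4 does not say how the sparse score (0.45) and the dense score (0.82) become the combined score of 0.67. One weighting that reproduces that figure, assumed here rather than stated in the lesson, is 0.6 for the dense score and 0.4 for the sparse score, with metadata acting as a filter rather than contributing to the score:

```latex
\text{combined}
  = w_{\text{dense}} \cdot s_{\text{dense}} + w_{\text{sparse}} \cdot s_{\text{sparse}}
  = 0.6 \times 0.82 + 0.4 \times 0.45
  = 0.492 + 0.180
  = 0.672 \approx 0.67
```

A plain average of the two scores would give 0.635 instead, so the example appears to weight the semantic signal more heavily than the keyword signal.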

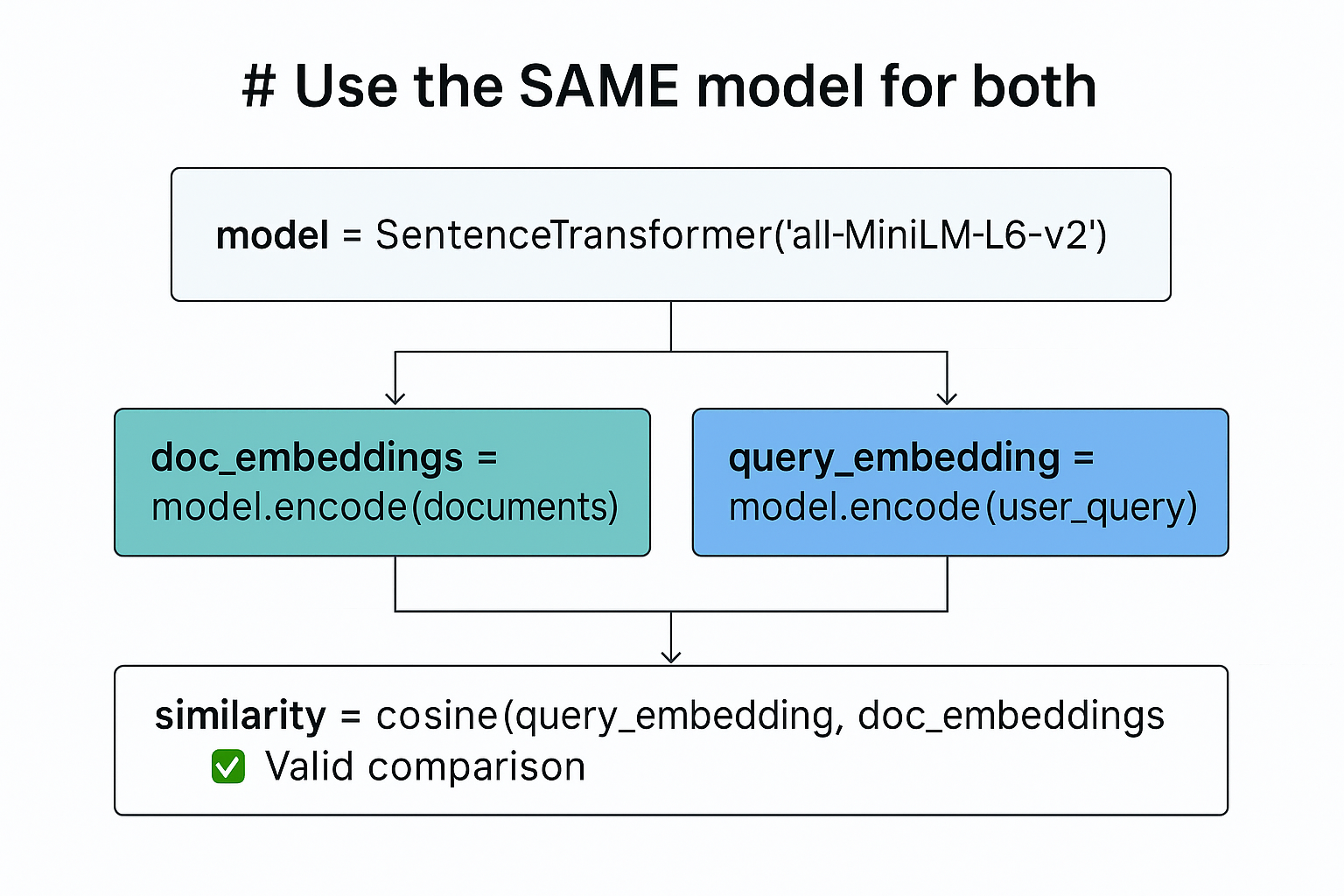

Common Mistakes ⚠️

Mistake 1: Using Inconsistent Embedding Models

❌ Wrong Approach:

    # Encode documents with model A
    doc_embeddings = modelA.encode(documents)

    # Later, encode the query with model B
    query_embedding = modelB.encode(user_query)

    # Compare embeddings from different models
    similarity = cosine(query_embedding, doc_embeddings)  # ❌ Meaningless!

✅ Correct Approach:

    from sentence_transformers import SentenceTransformer, util

    # Use the SAME model for both documents and queries
    model = SentenceTransformer('all-MiniLM-L6-v2')
    doc_embeddings = model.encode(documents)
    query_embedding = model.encode(user_query)
    similarity = util.cos_sim(query_embedding, doc_embeddings)  # ✅ Valid comparison

Why It Matters: Embeddings from different models live in different vector spaces, often with different numbers of dimensions, and there is no fixed conversion from one space to another. A similarity score computed across two models is therefore meaningless, even when the code runs without an error.

Mistake 2: Ignoring Context Window Limitations

❌ Wrong Approach:

    # Try to embed a 5,000-word document directly
    long_document = "word " * 5000  # Very long text, far beyond most models' limits
    embedding = model.encode(long_document)  # ❌ Truncated or error!

✅ Correct Approach:

    import numpy as np

    # Chunk long documents appropriately
    # (split_into_chunks is a helper you supply, not a library function)
    chunks = split_into_chunks(long_document, max_length=512)
    chunk_embeddings = [model.encode(chunk) for chunk in chunks]

    # Option 1: Search at chunk level
    # Option 2: Pool chunk embeddings (average, max, etc.)
    doc_embedding = np.mean(chunk_embeddings, axis=0)

Context Limits by Model:

- BERT: 512 tokens (~400 words)

- Sentence-BERT: 256-512 tokens

- OpenAI Ada-002: 8,191 tokens (~6,000 words)

- OpenAI text-embedding-3 models: about 8K tokens

⚠️ Warning: Text beyond the limit is either truncated (losing information) or causes errors. Always check your model's maximum input length.
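The chunking step can be sketched without any model. The `split_into_chunks` helper below is a hypothetical implementation that counts whitespace-separated words and overlaps neighbouring chunks; words are only an approximation of tokens, so real code should measure length with the embedding model's own tokenizer. `mean_pool` shows the averaging option on plain lists.

```python
def split_into_chunks(text, max_words=200, overlap=20):
    """Split text into word windows of at most max_words that share `overlap` words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reached the end of the text
    return chunks

def mean_pool(vectors):
    """Average a non-empty list of equal-length vectors element-wise."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

text = " ".join(f"w{i}" for i in range(450))  # a 450-word stand-in document
chunks = split_into_chunks(text, max_words=200, overlap=20)
print(len(chunks), [len(c.split()) for c in chunks])

pooled = mean_pool([[1.0, 2.0], [3.0, 4.0]])
print(pooled)
```

The overlap keeps a sentence that straddles a boundary visible in both neighbouring chunks. Averaging chunk vectors gives one vector per document but blurs topic shifts, which is why searching at chunk level is often preferred for long texts.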

Mistake 3: Not Normalizing Embeddings for Cosine Similarity

❌ Inefficient Approach:

    import numpy as np

    # Calculate cosine similarity the long way, recomputing both norms on every call
    def cosine_slow(a, b):
        return np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))

✅ Optimized Approach:

    # Normalize embeddings once during indexing
    embeddings = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)

    # Normalize the query too; now cosine similarity = dot product (much faster!)
    query_embedding = query_embedding / np.linalg.norm(query_embedding)
    scores = np.dot(query_embedding, embeddings.T)  # ✅ Fast batch computation

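Performance Impact: With normalized embeddings, scoring 1 million documents becomes a single matrix-vector product, because the norms (and their square roots) are no longer recomputed for every comparison. This can be 10-100x faster than computing cosine pair by pair, depending on hardware and library.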
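The equivalence behind this optimization is easy to check by hand. The sketch below uses two small made-up vectors and plain Python: after scaling each to unit length, the dot product equals the cosine similarity, and the squared Euclidean distance equals `2 - 2 * cosine`, so all three metrics produce the same ranking on normalized vectors.

```python
import math

def normalize(v):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

a = normalize([3.0, 4.0])  # becomes [0.6, 0.8]
b = normalize([4.0, 3.0])  # becomes [0.8, 0.6]

cos_ab = dot(a, b)                                  # cosine similarity of unit vectors
sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))   # squared Euclidean distance

print(cos_ab, sq_dist, 2 - 2 * cos_ab)
```

Both the indexed vectors and the query need to be normalized for the dot product to equal the cosine. Normalizing only the index preserves the ranking but not the score values.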

Mistake 4: Forgetting to Update Embeddings

❌ Static Problem:

    # Index documents once, never update
    initial_docs = ["doc1", "doc2", "doc3"]
    index.add(model.encode(initial_docs))  # ❌ What about new/updated docs?

    # 6 months later: index is stale, new products missing

✅ Dynamic Solution:

    # Implement incremental indexing
    # (`model` and a vector index exposing add/remove are assumed to exist)
    class DynamicIndex:
        def __init__(self, index):
            self.index = index

        def add_document(self, doc_id, doc):
            embedding = model.encode(doc)
            self.index.add(embedding, doc_id)  # ✅ Updates continuously

        def update_document(self, doc_id, new_doc):
            self.index.remove(doc_id)
            self.add_document(doc_id, new_doc)  # ✅ Keeps embeddings fresh

Update Strategy:

- Real-time: Encode and index immediately (e-commerce products)

- Batch: Nightly re-indexing (news articles)

- Hybrid: Real-time adds + periodic full refresh (large corpora)
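For the batch and periodic-refresh strategies, re-encoding every document on each run is wasteful when most of the content has not changed. A minimal sketch, assuming the index is a plain dict and `encode` is any text-to-vector callable (both are stand-ins, not a specific vector database API), is to store a content hash next to each vector and re-encode only what changed:

```python
import hashlib

def refresh_index(index, documents, encode):
    """Re-encode only new or changed documents and drop ones that disappeared.

    index:     dict of doc_id -> {"hash": str, "vector": list}
    documents: dict of doc_id -> current text
    Returns the ids that were (re-)encoded.
    """
    changed = []
    for doc_id, text in documents.items():
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        entry = index.get(doc_id)
        if entry is None or entry["hash"] != digest:
            index[doc_id] = {"hash": digest, "vector": encode(text)}
            changed.append(doc_id)
    for doc_id in list(index):
        if doc_id not in documents:
            del index[doc_id]  # document was deleted at the source
    return changed

def fake_encode(text):
    """Placeholder encoder so the sketch runs without a model."""
    return [float(len(text))]

index = {}
first = refresh_index(index, {"a": "x", "b": "yy"}, fake_encode)
second = refresh_index(index, {"a": "x", "b": "yyy"}, fake_encode)
third = refresh_index(index, {"a": "x"}, fake_encode)
print(first, second, third)
```

Only document `b` is re-encoded on the second run, and it is removed on the third. If the embedding model itself changes, every vector must be rebuilt regardless of the hashes, since old and new vectors would no longer share a space.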

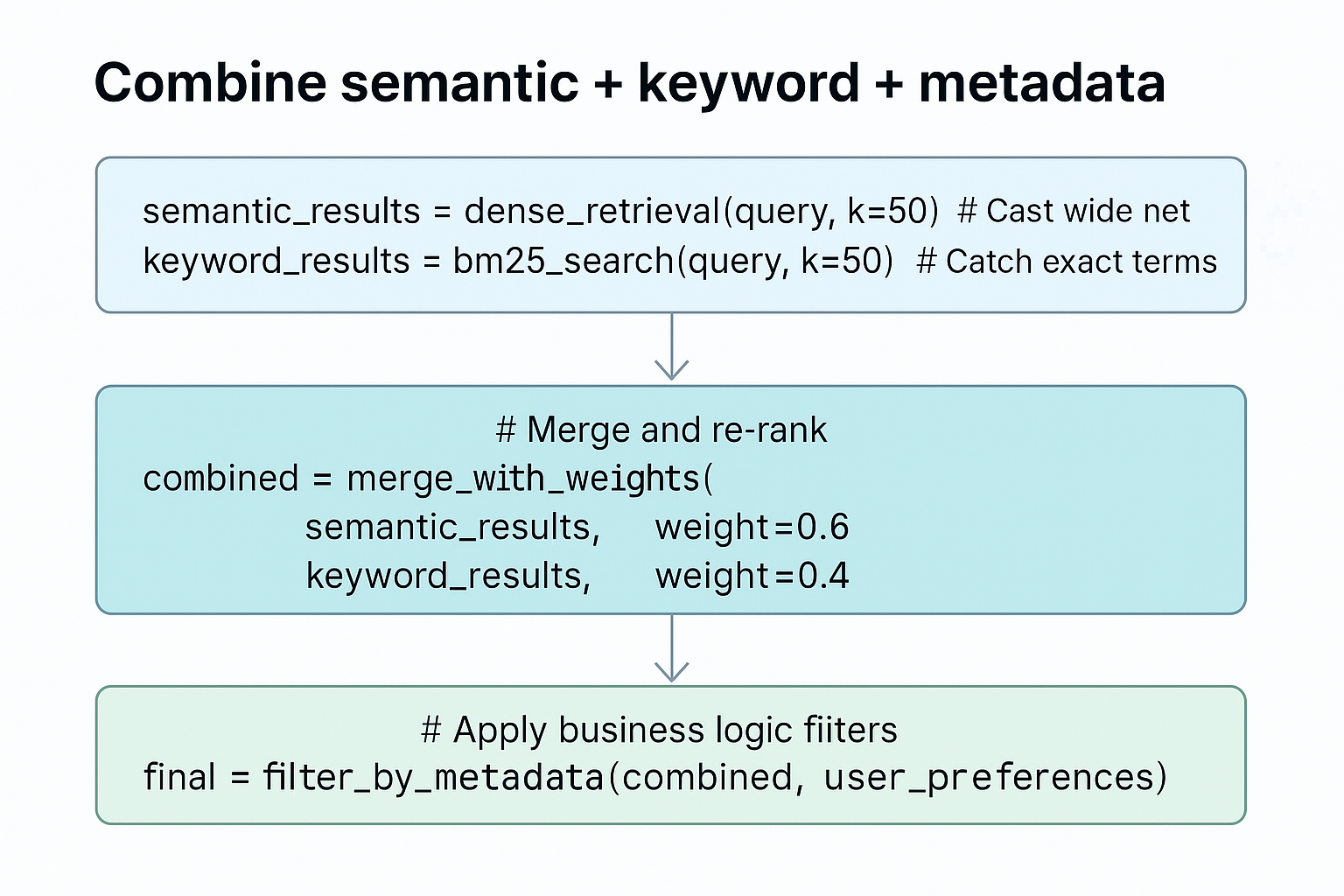

Mistake 5: Over-Relying on Semantic Search Alone

❌ Pure Semantic Approach:

    # Only use semantic similarity
    results = semantic_search(query, top_k=10)  # ❌ Misses exact matches!

✅ Balanced Approach:

    # Combine semantic + keyword + metadata
    # (dense_retrieval, bm25_search, merge_with_weights and filter_by_metadata
    #  are placeholder helpers, not library functions)
    semantic_results = dense_retrieval(query, k=50)  # Cast wide net
    keyword_results = bm25_search(query, k=50)       # Catch exact terms

    # Merge and re-rank
    combined = merge_with_weights(
        semantic_results, keyword_results,
        semantic_weight=0.6, keyword_weight=0.4,
    )

    # Apply business logic filters
    final = filter_by_metadata(combined, user_preferences)
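The lesson leaves `merge_with_weights` undefined. One hypothetical way to write it, assuming each retriever returns a `{doc_id: score}` dict, is to rescale both score sets to the range 0 to 1 and take a weighted sum. The rescaling matters because BM25 scores are unbounded while cosine scores are not. The signature, the product names, and the scores below are all invented for illustration.

```python
def min_max(scores):
    """Rescale a {doc_id: score} dict to the range [0, 1]."""
    if not scores:
        return {}
    low, high = min(scores.values()), max(scores.values())
    if high == low:
        return {doc_id: 1.0 for doc_id in scores}
    return {doc_id: (s - low) / (high - low) for doc_id, s in scores.items()}

def merge_with_weights(semantic, keyword, semantic_weight=0.6, keyword_weight=0.4):
    """Weighted sum of normalized scores; a doc missing from one list scores 0 there."""
    sem, kw = min_max(semantic), min_max(keyword)
    combined = {
        doc_id: semantic_weight * sem.get(doc_id, 0.0)
                + keyword_weight * kw.get(doc_id, 0.0)
        for doc_id in set(sem) | set(kw)
    }
    return sorted(combined.items(), key=lambda item: item[1], reverse=True)

semantic_scores = {"parka": 0.94, "softshell": 0.91, "poles": 0.40}  # cosine
keyword_scores = {"poles": 7.2, "parka": 3.1, "fleece": 5.0}         # raw BM25

merged = merge_with_weights(semantic_scores, keyword_scores)
for doc_id, score in merged:
    print(f"{score:.3f}  {doc_id}")
```

The weights are a tuning knob. The 0.6 and 0.4 values mirror the snippet above, and in practice they would be chosen by evaluating on real queries.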

🎯 Best Practice: Use semantic search as a powerful component within a larger retrieval system, not as the only method.

Key Takeaways 🎓

📋 Quick Reference Card

| Concept | Key Point |

|---|---|

| 🎯 Semantic Search | Retrieval based on meaning and intent, not just keywords |

| 📐 Vector Embeddings | Numerical representations capturing semantic content (384-1536 dimensions typical) |

| 📏 Cosine Similarity | Standard metric for text similarity; range -1 to +1, typically 0.7+ is high match |

| 🏗️ Bi-Encoder | Fast at scale (encode once, store vectors); use for initial retrieval |

| 🎯 Cross-Encoder | More accurate (joint encoding); use for re-ranking top results |

| 🔄 Hybrid Search | Combines sparse (BM25) + dense (embeddings) for best results |

| ⚠️ Model Consistency | MUST use same embedding model for queries and documents |

| 📊 Context Limits | Chunk long documents; typical limit: 512 tokens (BERT) to 8K (modern models) |

| ⚡ Optimization | Normalize embeddings once → cosine similarity = dot product (much faster) |

| 🔄 Maintenance | Update embeddings regularly as content changes (real-time or batch) |

Core Principles to Remember:

1. Semantic search solves the vocabulary gap by understanding meaning beyond exact word matches.

2. Embeddings are the foundation: they transform text into mathematical representations where similar concepts cluster together in vector space.

3. Similarity metrics measure closeness: cosine similarity is the usual default for text, focusing on direction rather than magnitude.

4. Pipeline architecture matters: bi-encoders for speed (initial retrieval), cross-encoders for accuracy (re-ranking).

5. Hybrid approaches win in production: combine semantic search with keyword matching and business logic for optimal results.

6. Consistency is critical: use the same embedding model throughout, respect context limits, and keep embeddings updated.

Mental Model 🧠

Think of semantic search as a library organized by concepts rather than alphabetically:

- Traditional search = card catalog (find exact title matches)

- Semantic search = knowledgeable librarian (understands what you're really looking for)

When you ask for "books about overcoming adversity," the librarian knows to show you biographies, self-help, and inspirational fiction—even if none contain that exact phrase.

When to Use Semantic Search:

✅ Great for:

- Natural language queries ("How do I...", "What's the best...")

- Multilingual or cross-lingual search

- Recommendation systems

- Question answering

- Customer support automation

- Research and discovery

❌ Not ideal for:

- Exact identifier lookups (SKUs, case numbers)

- Code search (syntax-sensitive)

- Ultra-low latency requirements (<10ms)

- Extremely limited compute resources

Next Steps in Your Learning Journey:

- Practice: Implement basic semantic search with Sentence-BERT or OpenAI embeddings

- Experiment: Compare cosine vs. dot product vs. Euclidean on your dataset

- Build: Create a hybrid system combining BM25 and dense retrieval

- Optimize: Learn approximate nearest neighbor algorithms (HNSW, IVF) for scale

- Advanced: Explore re-ranking models and query expansion techniques

📚 Further Study

Official Documentation & Tutorials:

- Sentence-BERT: Sentence Transformers Library - https://www.sbert.net/

- Pinecone Learning Center: Semantic Search Guide - https://www.pinecone.io/learn/semantic-search/

Research Papers & Deep Dives:

- "Efficient Natural Language Response Suggestion for Smart Reply" (Google, 2017) - Foundational semantic matching paper

- Weaviate Blog: Dense vs. Sparse Vectors Explained - https://weaviate.io/blog/dense-vs-sparse-vectors